Machine Learning

The geometry of neural networks with piecewise linear activation functions.

The Real Tropical Geometry of Neural Networks

A ReLU neural network is an alternating composition of affine linear functions and the ReLU activation function \(\max(0,x)\), which is applied to a vector coordinate-wise. Any such network is thus a piecewise linear function, and the converse also holds: any piecewise linear function can be expressed as a ReLU neural network. Tropical geometry may be thought of as the geometry of piecewise linear functions, so it is natural to study such networks through the lens of tropical geometry. We focus on binary classification tasks: given a finite data set, we seek to separate the data into two classes, depending on the sign of the value of the function represented by the network.
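As a minimal sketch of this setup, the following pure-Python snippet alternates affine maps with a coordinate-wise ReLU and classifies data points by the sign of the output. The names `network`, `classify`, `LAYERS`, and `DATA` are hypothetical, the weights are chosen by hand so that the network computes \(|x| - 1\), and sending the value 0 to the class \(-1\) is an assumption made here, not a convention from the text.

```python
def relu(v):
    """Apply max(0, t) to every coordinate of the vector v."""
    return [max(0.0, t) for t in v]


def affine(W, b, v):
    """Return W v + b for a matrix W (list of rows) and a bias vector b."""
    return [sum(w * t for w, t in zip(row, v)) + b_i for row, b_i in zip(W, b)]


def network(layers, x):
    """Alternate affine maps and ReLU; the final layer is affine only."""
    v = list(x)
    for W, b in layers[:-1]:
        v = relu(affine(W, b, v))
    W, b = layers[-1]
    return affine(W, b, v)[0]


def classify(layers, x):
    """Binary label from the sign of the network output (0 goes to -1 here)."""
    return 1 if network(layers, x) > 0 else -1


# Hand-picked weights (an illustrative assumption): the hidden layer computes
# relu(x) and relu(-x), and the output layer sums them and subtracts 1,
# so the network represents the piecewise linear function |x| - 1.
LAYERS = [
    ([[1.0], [-1.0]], [0.0, 0.0]),
    ([[1.0, 1.0]], [-1.0]),
]

DATA = [[-2.0], [-0.5], [0.5], [2.0]]
LABELS = [classify(LAYERS, x) for x in DATA]
print(LABELS)
```

The resulting labelling puts the two outer points in one class and the two inner points in the other, which no single linear function on the real line can achieve.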

A special case of this setup is classification by a hyperplane defined by a linear function, where points are classified according to the side of the hyperplane on which they lie. Ranging over all possible classifications of a fixed set of data points yields three equivalent combinatorial constructions:

- The parameters of linear functions which induce the same classification form a chamber in a hyperplane arrangement

- The hyperplane arrangement induces the normal fan of a zonotope, i.e., a Minkowski sum of 1-dimensional simplices

- The possible classifications correspond to the maximal covectors of a realizable oriented matroid
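The correspondence in the list above can be checked by hand on a tiny example. The sketch below makes several assumptions not in the text: three hypothetical data points in the plane, homogeneous linear functions \(w \cdot x\) without a bias term, and the zonotope taken as the Minkowski sum of the segments \([-x_i, x_i]\) (one convention among several). It samples parameters \(w\) on the unit circle, which is enough here because the chamber boundaries lie at whole-degree angles and the samples are offset by half a degree.

```python
import math

# Hypothetical data points in the plane.
POINTS = [(1.0, 0.0), (0.0, 1.0), (1.0, 1.0)]


def sign_pattern(w, points):
    """Classification of all points induced by the linear function w . x."""
    return tuple(1 if w[0] * x[0] + w[1] * x[1] > 0 else -1 for x in points)


def classifications(points):
    """Collect the sign patterns seen while w runs around the unit circle.

    Each distinct pattern corresponds to one chamber of the arrangement of
    the lines {w : w . x_i = 0} in parameter space.
    """
    patterns = set()
    for k in range(360):
        angle = math.radians(k + 0.5)  # offset avoids the chamber boundaries
        w = (math.cos(angle), math.sin(angle))
        patterns.add(sign_pattern(w, points))
    return patterns


def zonotope_vertex(pattern, points):
    """Vertex of the sum of segments [-x_i, x_i] selected by a sign pattern."""
    return (
        sum(s * x[0] for s, x in zip(pattern, points)),
        sum(s * x[1] for s, x in zip(pattern, points)),
    )


PATTERNS = classifications(POINTS)
VERTICES = sorted(zonotope_vertex(p, POINTS) for p in PATTERNS)
print(len(PATTERNS), VERTICES)
```

For this data the arrangement has six chambers, the zonotope is a hexagon with six vertices, and the set of sign patterns is closed under negation, as expected for the maximal covectors of an oriented matroid. The pattern \((+,+,-)\) never occurs, since \(w \cdot x_1 > 0\) and \(w \cdot x_2 > 0\) force \(w \cdot (x_1 + x_2) > 0\).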

In [BLM24] we characterize the analogues of these constructions when extending from linear to piecewise linear functions. This yields the following:

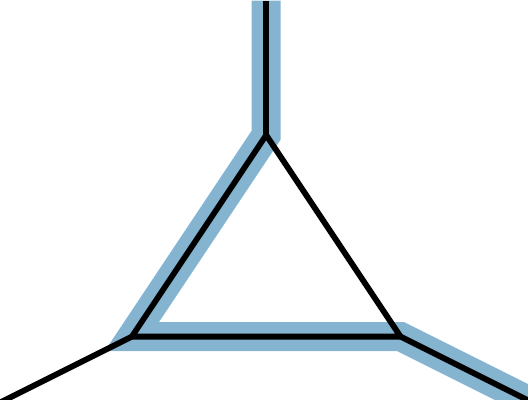

- The parameters of piecewise linear functions form a polyhedral fan in an arrangement of “bent hyperplanes” (which are, in fact, positive tropicalizations of certain hypersurfaces)

- The arrangement induces a normal fan of the activation polytope, which is a Minkowski sum of simplices

- The possible classifications correspond to activation patterns, which are bipartite graphs resembling the covectors of oriented matroids and tropical oriented matroids
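To give a rough feeling for how a bipartite graph can record which linear pieces are active at which data points, here is a simplified sketch. It is an illustration under stated assumptions and not the definition used in [BLM24]: the function is a single maximum of affine pieces, the names `PIECES`, `DATA`, `active_pieces`, and `activation_graph` are hypothetical, and an edge \((i, j)\) simply means that piece \(j\) attains the maximum at data point \(i\).

```python
def active_pieces(pieces, x, tol=1e-9):
    """Indices of the affine pieces a . x + c attaining the maximum at x."""
    values = [sum(a_k * x_k for a_k, x_k in zip(a, x)) + c for a, c in pieces]
    top = max(values)
    return [j for j, v in enumerate(values) if top - v <= tol]


def activation_graph(pieces, data):
    """Edges (i, j) of a bipartite graph: data point i activates piece j."""
    return {(i, j) for i, x in enumerate(data) for j in active_pieces(pieces, x)}


# Hypothetical example: the piecewise linear function max(0, x, 2x - 1),
# with each piece stored as (slope vector, constant term).
PIECES = [((0.0,), 0.0), ((1.0,), 0.0), ((2.0,), -1.0)]
DATA = [(-1.0,), (0.5,), (1.0,), (3.0,)]

GRAPH = activation_graph(PIECES, DATA)
print(sorted(GRAPH))
```

The data point \(x = 1\) lies on the breakpoint between the pieces \(x\) and \(2x - 1\), so it is joined to both of them; the other data points each have a single edge.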

Slides of Talks

| Date | Event | Slides |
| --- | --- | --- |
| 19 March 2024 | Combinatorial Coworkspace | [Slides] |
| 20 February 2024 | Applied Combinatorics, Algebra, Topology and Statistics Seminar | [Slides] |
| 23 January 2023 | MFO Workshop Discrete Geometry | [Slides] |
| 6 November 2023 | Theoretical Foundations of Deep Learning | [Slides] |

References